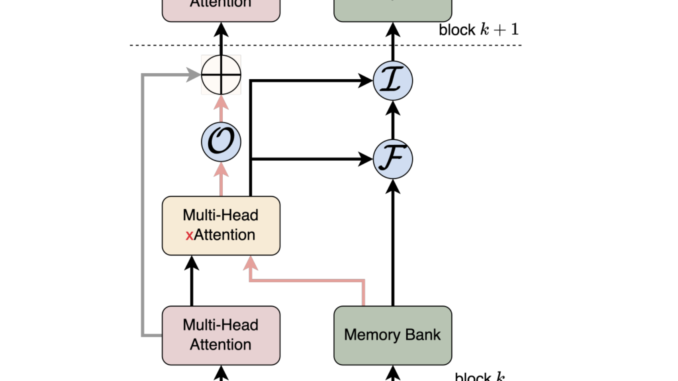

Convergence Labs Introduces the Large Memory Model (LM2): A Memory-Augmented Transformer Architecture Designed to Address Long Context Reasoning Challenges

Transformer-based models have significantly advanced natural language processing (NLP), excelling in various tasks. However, they struggle with reasoning over long contexts, multi-step inference, and numerical reasoning. These challenges arise from their quadratic complexity in self-attention, […]