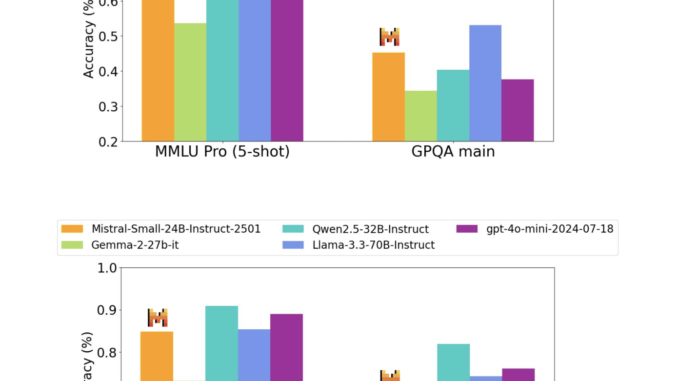

Mistral AI Releases Mistral-Small-24B-Instruct-2501: A Latency-Optimized 24B-Parameter Model Under the Apache 2.0 License

Developing compact yet high-performing language models remains a significant challenge in artificial intelligence. Large-scale models often require extensive computational resources, which puts them out of reach for many users and organizations with limited hardware. Additionally, there is […]